IEEE Journal of Selected Topics in Signal Processing 2022

Communication-Efficient Federated Learning via Predictive Coding

Kai Yue, Richeng Jin, Chau-Wai Wong, and Huaiyu Dai

NC State University

Abstract

Federated learning can enable remote workers to collaboratively train a shared machine learning model while allowing training data to be kept locally. In the use case of wireless mobile devices, the communication overhead is a critical bottleneck due to limited power and bandwidth. Prior work has utilized various data compression tools such as quantization and sparsification to reduce the overhead. In this paper, we propose a predictive-coding-based compression scheme for federated learning. The scheme has shared prediction functions among all devices and allows each worker to transmit a compressed residual vector derived from the reference. In each communication round, we select the predictor and quantizer based on the rate-distortion cost, and further reduce the redundancy with entropy coding. Extensive simulations reveal that the communication cost can be reduced by up to 99% with even better learning performance when compared with other baseline methods.
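The per-round choice of predictor and quantizer mentioned in the abstract can be illustrated with a minimal sketch. It assumes a Lagrangian cost J = D + λR, with mean squared error as the distortion D and the empirical entropy of the quantized indices as a proxy for the rate R. These definitions, the candidate predictors, the step sizes, and the value of `lam` are all assumptions made for illustration and are not taken from the paper.

```python
import math
from collections import Counter
from itertools import product


def empirical_entropy_bits(symbols):
    """Empirical entropy in bits per symbol, used here as a rate proxy."""
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def rd_cost(weights, predicted, step, lam):
    """Assumed cost J = D + lam * R for one (prediction, step size) pair."""
    # Uniform scalar quantization of the prediction residual.
    indices = [round((w - p) / step) for w, p in zip(weights, predicted)]
    recon = [p + q * step for p, q in zip(predicted, indices)]
    distortion = sum((w - r) ** 2 for w, r in zip(weights, recon)) / len(weights)
    rate = empirical_entropy_bits(indices)
    return distortion + lam * rate


def select(weights, predictions, steps, lam):
    """Return the (predictor name, step size) pair with the lowest cost."""
    return min(
        product(predictions, steps),
        key=lambda c: rd_cost(weights, predictions[c[0]], c[1], lam),
    )


# Hypothetical data: updated weights and the outputs of two toy predictors.
weights = [0.9, 0.1, 0.52, 0.3]
predictions = {
    "zero": [0.0, 0.0, 0.0, 0.0],          # predicts nothing
    "previous": [0.88, 0.12, 0.5, 0.31],   # reuses last round's weights
}
best = select(weights, predictions, steps=[0.1, 0.01], lam=0.01)
print(best)
```

In this toy setting the previous-weights predictor with the coarser step wins, because its residual quantizes to a single symbol (zero rate under the entropy proxy) at a small distortion.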

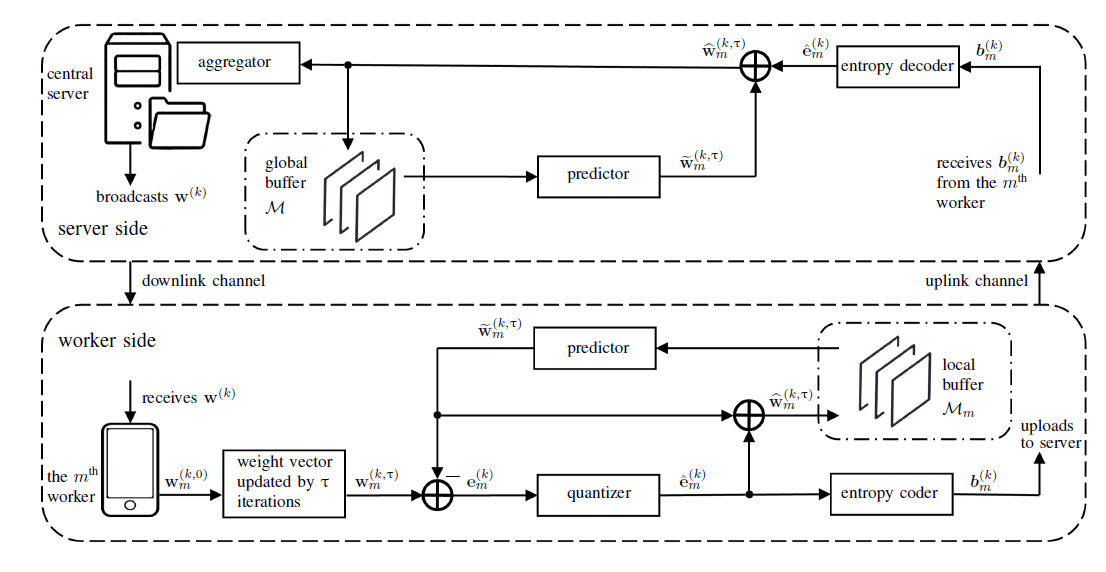

Fig 1. Proposed federated learning scheme via predictive coding. First, each worker receives the global model weights from the server. Second, each worker performs local iterations to update the model. After the local iterations, each worker obtains the updated weights and uses the predictor to estimate them from its local buffer. The residual between the updated weights and their predicted version is fed into the quantizer. The quantized residual is then entropy coded and uploaded to the server. On the server side, decoding is performed as the inverse of the encoding procedure.
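The encode and decode path in Fig 1 can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the predictor simply reuses the most recent buffered weights, the quantizer is a uniform scalar quantizer with a hypothetical step size, and the entropy coding stage is omitted (the integer indices are what would be entropy coded). All function names are made up for this example.

```python
from typing import List


def predict_previous(buffer: List[List[float]]) -> List[float]:
    """Assumed predictor: reuse the most recent weights in the buffer."""
    return list(buffer[-1])


def quantize(residual: List[float], step: float) -> List[int]:
    """Uniform scalar quantizer: map each residual entry to an integer index."""
    return [round(r / step) for r in residual]


def dequantize(indices: List[int], step: float) -> List[float]:
    """Map integer indices back to reconstructed residual values."""
    return [q * step for q in indices]


def encode(weights: List[float], buffer: List[List[float]], step: float) -> List[int]:
    """Worker side: predict, form the residual, quantize it."""
    predicted = predict_previous(buffer)
    residual = [w - p for w, p in zip(weights, predicted)]
    return quantize(residual, step)


def decode(indices: List[int], buffer: List[List[float]], step: float) -> List[float]:
    """Server side: same prediction plus the dequantized residual."""
    predicted = predict_previous(buffer)
    return [p + r for p, r in zip(predicted, dequantize(indices, step))]


# Worker and server start from identical buffers (hypothetical values).
worker_buffer = [[0.0, 0.0, 0.0]]
server_buffer = [[0.0, 0.0, 0.0]]
step = 0.1

weights = [0.26, -0.14, 0.02]          # weights after local iterations
indices = encode(weights, worker_buffer, step)
recon = decode(indices, server_buffer, step)

# Both sides store the reconstruction so their predictions stay in sync.
worker_buffer.append(recon)
server_buffer.append(recon)
print(indices, recon)
```

Storing the reconstructed weights rather than the exact ones in the worker's buffer is a design choice of this sketch: it keeps the worker's prediction identical to the server's, so quantization error does not accumulate across rounds.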

Results

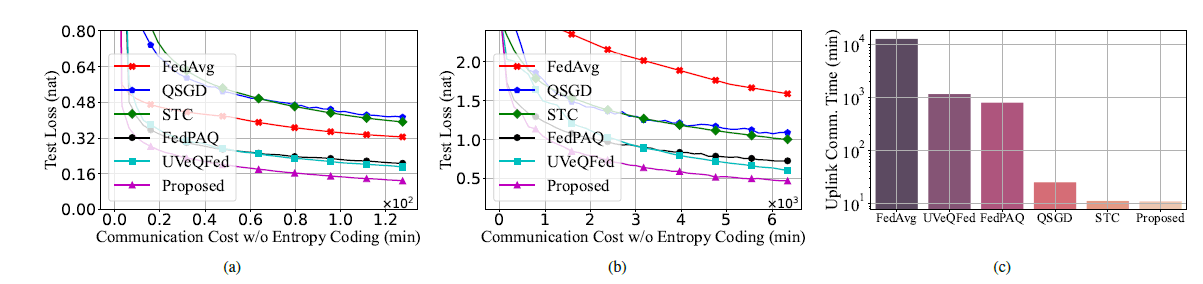

Fig 2. Test loss versus communication cost without entropy coding on (a) the non-i.i.d. Fashion-MNIST dataset and (b) the non-i.i.d. CIFAR-10 dataset. The proposed scheme outperforms other methods by achieving the lowest test loss at the same uplink cost. (c) Uplink communication time to reach 60% test accuracy on the CIFAR-10 task. The proposed scheme is advantageous in bandwidth-constrained scenarios because it drastically reduces communication time.

Video Tutorial on Predictive Coding

Citation

title={Communication-Efficient Federated Learning via Predictive Coding},
author={Yue, Kai and Jin, Richeng and Wong, Chau-Wai and Dai, Huaiyu},
journal={IEEE Journal of Selected Topics in Signal Processing},
volume={16},
number={3},
pages={369--380},
year={2022},
publisher={IEEE}
}